Site reliability gets less attention than other performance indicators, such as page speed. With many web hosts guaranteeing uptime with multiple 9’s (99.99% or better), reliability has become less of a ‘hot’ topic and more of an expectation: your site will simply be up almost all of the time.
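To put “multiple 9’s” in concrete terms, the short Python sketch below converts an uptime percentage into the downtime it still permits over a year. It assumes a 365-day year, and the function name `allowed_downtime_minutes` is hypothetical. Note that the calculation measures availability only; it says nothing about whether your data survives an outage.

```python
# Convert an uptime percentage ("number of nines") into the downtime it allows.
# Assumes a 365-day year; leap years are ignored for simplicity.

MINUTES_PER_YEAR = 365 * 24 * 60


def allowed_downtime_minutes(uptime_percent: float) -> float:
    """Minutes per year a site may be down while still meeting uptime_percent."""
    return MINUTES_PER_YEAR * (1 - uptime_percent / 100)


if __name__ == "__main__":
    for uptime in (99.9, 99.99, 99.999):
        minutes = allowed_downtime_minutes(uptime)
        print(f"{uptime}% uptime -> {minutes:.1f} minutes of downtime per year")
```

Each additional 9 cuts the permitted downtime by a factor of ten, which is why high uptime guarantees have become a selling point in their own right.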

But what many people don’t realize is that there’s more to reliability than uptime.

One of the most important pieces of the reliability puzzle is Data Reliability, which generally refers to the protection of both your database and your site files. Posts, pages, and the files that support them are often the most valuable part of a WordPress site, so protecting both the database and the site files should be a priority.

Your data can be endangered both by catastrophic events (think of a natural disaster in the region where a data center is located) and by more common, day-to-day occurrences. Your host’s configuration plays a major role in how well you are protected from both. Investing a few minutes to confirm that your host has adequate data reliability protections in place can prevent a disaster.

Let’s explore how server configurations can help or hinder Data Reliability, the trade-offs those optimizations can introduce, and how to find the right balance between data reliability and other performance factors based on your organization’s needs.

The Container Model

The Container Model is fairly straightforward: all site files and the database live on the same machine. This allows for simpler management and slightly lower latency between components, such as the site files and the database. But because everything depends on that single machine, a small issue there can quickly escalate into a larger one.

We’ve seen cases where sites on containerized platforms (which are themselves hosted on other platforms) experience a spike in traffic: PHP consumes all of the system memory, the machine locks up, and the database tables are corrupted. On a larger site, that could mean an hour or two of recovery time before the site is back up. In that case, the owner may have lost data, not to mention business. If Google or another search engine attempts to index your site during this downtime, your ranking could suffer, because search engines do not want to serve unreliable sites to their users.

Downtime + lost data = risky business

This risk becomes even more significant when other sites share those same resources and when backups are stored on the same machine.

The Dedicated Resources Model

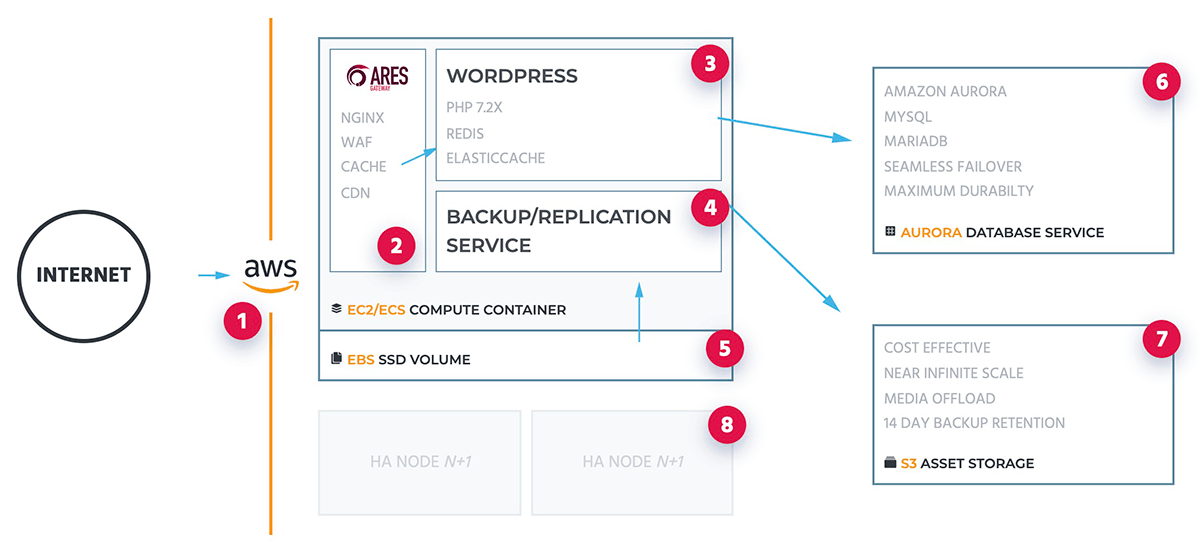

The alternative to the grouped Container Model is what we call the Dedicated Resources Model. By keeping the database, code, and backups on separate, dedicated server instances, we ensure that a failure or issue with one asset has minimal impact on the others. Compared with sharing a single server, this configuration is superior in terms of both data reliability and performance at scale.

If the Dedicated Resources approach makes sense to you, these are the questions to ask your current and potential hosting providers:

- Are there separate resources dedicated to your code and your database?

- What deliberate hardware decisions have they made to protect your data?

- How do they manage backups and recovery? Are backups stored separately?

If they aren’t actively addressing these issues, you could be looking at not only potential downtime and data loss but also scalability challenges as you grow.

The Trade-off

We’ve established that having the database and app on separate server instances (separate virtual machines) is ideal for overall reliability, but this server utopia comes at a cost: latency.

Because the application and the database run on separate machines, every database query has to cross the network. This results in slightly higher latency between the two, which affects page speed.

But the real-world performance difference caused by this latency is negligible (typically in the 1-2 millisecond range), as long as there are no major issues with the site code or plugins.
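One way to see why code quality matters here: if each database query is a separate network round trip, the extra delay is paid once per query, so the total grows with the number of queries a page runs. The Python sketch below assumes queries run sequentially and uses a made-up per-query delay (`extra_rtt_ms`) and made-up query counts; the function name `added_latency_ms` is hypothetical, and none of these numbers are measurements.

```python
# Hypothetical illustration: how a small per-query network delay between
# separate app and database servers adds up over one page load.
# All numbers below are assumptions chosen for illustration, not measurements.


def added_latency_ms(queries_per_page: int, extra_rtt_ms: float) -> float:
    """Extra milliseconds a page spends waiting on the network, assuming each
    query is one sequential round trip that pays the extra delay once."""
    return queries_per_page * extra_rtt_ms


if __name__ == "__main__":
    extra_rtt_ms = 0.05  # assumed extra cost of one app-to-database round trip

    # A lean page versus a page bogged down by an inefficient plugin (assumed counts).
    for queries in (30, 2000):
        total = added_latency_ms(queries, extra_rtt_ms)
        print(f"{queries:>5} queries -> {total:.1f} ms of added latency")
```

Under these assumed numbers, a lean page stays within a couple of milliseconds, while a plugin that issues thousands of queries per page multiplies the same small delay many times over. That is why fixing problematic code matters more than the separation itself.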

This is where a reliable managed hosting partner proves its value: by finding problematic code and helping you fix it. Pagely does this for every customer, and it’s a level of service most other hosts do not offer.

How Important is Data Reliability to You?

Depending on the complexity of your WordPress website and the requirements of your business, the answer is usually ‘very important.’ With that in mind, ask your team the following questions to determine where your priorities lie:

- Do we have noticeable performance issues? If more hardware isn’t the answer, are we willing to address the root cause of those issues?

- Can we quantify the value of our data reliability and uptime?

- Is page speed measurably more or less valuable to us than data reliability, scalability, and flexibility?

- Can we achieve both better performance and data reliability at better-than-acceptable levels with our current provider?

That last question is at the heart of the problem we’ve spent the last decade solving at Pagely.

Yes, we’ve made hard but smart decisions about the trade-offs that increase reliability for WordPress. In doing so, we’ve balanced the key performance and stability metrics in service of the one goal larger organizations strive to achieve: scalability.

By working with your team to improve your code and customize your server configuration to match your specific needs, we’ve created real-world solutions that can scale as you grow.